Automated driving (AD) will ultimately make driving more convenient and safer. McKinsey consultants predict that autonomy level 5 could be reached by 2030, meaning that AD technology will be available anytime, anywhere, and that vehicles can drive themselves without a person on board. To realize automated driving successfully and safely, connected cars are equipped with HD maps and sensors such as ultrasound, radar, cameras, and lidar. This was also one of the central themes at the ELIV-Congress (ELIV stands for Electronics in Vehicles), which is organized by the VDI and took place in Bonn on October 20 and 21. The congress has become a must-attend event for decision makers and experts in the automotive electronics and software industry.

But despite massive improvements in AD, most driving today is still done manually. How can advanced navigation systems take advantage of available HD map data and vehicle sensors and use them for other features, for example when the vehicle is outside its Operational Design Domain (ODD)? How does visual inertial odometry combined with HD lane and landmark information lead to a precise 6-degree-of-freedom (DoF) pose as the key to accurately projecting navigation and guidance context into an Augmented Reality (AR) scene? Such a projection can support navigation and guidance, for example by helping drivers select the correct lane to reach their destination when the car is approaching an intersection, an exit, or a lane merge. Another example is a warning to slow down because other road users are obstructing the planned trajectory.

Precise calculation of vehicle movements

Augmented Reality (AR) features that can display navigation guidance are attracting increasing attention. Visual inertial odometry combined with HD lane and landmark information can lead to a precise 6-DoF (x, y, z, roll, pitch, yaw) pose in this context. This pose can be used for automated driving applications, and it is also required for precise AR guidance applications. The interaction of visual odometry information with inertial measurement data enables precise calculation of a vehicle’s relative motion. This information is then used to adjust static camera calibration values so that roll, pitch, and yaw are handled appropriately when mapping navigation guidance announcements to the AR scene. To correctly map global navigation information to the AR scene, we also need a precise (x, y, z) position. Only then can AR guidance be displayed correctly over the reality in front of the driver and provide good guidance. One example might be overlaying arrows on the lanes and road ahead that a driver can then follow to her or his destination.
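To make the role of the 6-DoF pose more concrete, here is a minimal sketch of how a guidance point in world coordinates could be mapped to a pixel in the AR scene. It is an illustration under stated assumptions, not the method used in any particular product: the function names, the axis conventions (vehicle frame with x forward, y left, z up), the rotation order, the simple pinhole camera placed at the pose origin, and the default intrinsics `fx`, `fy`, `cx`, `cy` are all hypothetical.

```python
import math


def rotation_vehicle_to_world(roll, pitch, yaw):
    """Return R = Rz(yaw) * Ry(pitch) * Rx(roll) for angles in radians.

    Assumed convention: right-handed rotations, vehicle x forward, y left, z up.
    """
    c_r, s_r = math.cos(roll), math.sin(roll)
    c_p, s_p = math.cos(pitch), math.sin(pitch)
    c_y, s_y = math.cos(yaw), math.sin(yaw)
    return [
        [c_y * c_p, c_y * s_p * s_r - s_y * c_r, c_y * s_p * c_r + s_y * s_r],
        [s_y * c_p, s_y * s_p * s_r + c_y * c_r, s_y * s_p * c_r - c_y * s_r],
        [-s_p, c_p * s_r, c_p * c_r],
    ]


def project_to_image(point_world, pose, fx=1000.0, fy=1000.0, cx=640.0, cy=360.0):
    """Project a world point to pixel coordinates (u, v) with a pinhole model.

    pose is (x, y, z, roll, pitch, yaw); the camera is assumed to sit at the
    pose origin and to look along the vehicle's forward axis.
    Returns None if the point is not in front of the camera.
    """
    x, y, z, roll, pitch, yaw = pose
    rot = rotation_vehicle_to_world(roll, pitch, yaw)
    delta = [point_world[0] - x, point_world[1] - y, point_world[2] - z]

    # World -> vehicle frame: multiply by the transpose of the rotation matrix.
    forward, left, up = (
        sum(rot[j][i] * delta[j] for j in range(3)) for i in range(3)
    )
    if forward <= 0.0:
        return None

    # Image u grows to the right and v grows downward, hence the sign flips.
    u = cx + fx * (-left) / forward
    v = cy + fy * (-up) / forward
    return u, v
```

With these assumed defaults, a road point 10 m straight ahead of a camera mounted 1.5 m above the road, `project_to_image((10, 0, 0), (0, 0, 1.5, 0, 0, 0))`, lands at roughly pixel (640, 510). A small error in yaw or pitch shifts that pixel noticeably, which is why an AR arrow only stays on its lane when all six pose components are accurate.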

To approach this important topic, we will first address the basics of HD map data in this blog post. A second post will then look at how visual inertial odometry algorithms help to generate a precise 6-DoF pose and how advanced navigation systems can use HD map data and HD positioning to make driving safer and more convenient.

Basics of HD map data

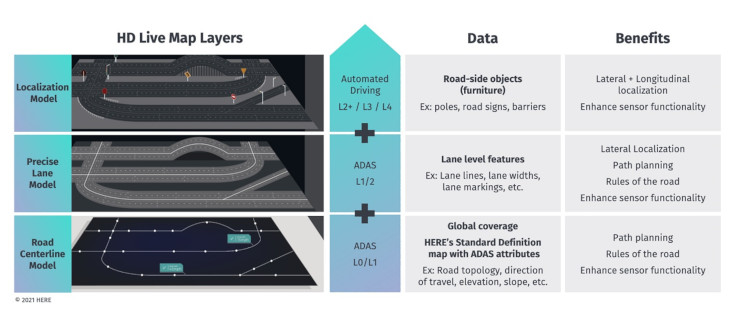

Map data acts as an additional “sensor” that helps overcome some of the limitations of the different perception systems of an AD solution. If a vehicle uses an electronic horizon based on map data, it can understand the road in more detail and see farther ahead than its sensors. It can also understand its surroundings even when sensor visibility is limited by weather, other road users, or objects. A map-based electronic horizon allows for more efficient driving while maintaining driver and passenger comfort. An electronic horizon that relies on HD map data also helps the vehicle continuously maintain its exact position. Map providers such as HERE build HD maps with three clusters of data layers. The HERE HD Live Map, for example, is a cloud-based service that consists of multiple tiled map layers that are highly accurate and kept up to date to support connected AD solutions. The layers are logically divided into a road centerline model, an HD lane model, and an HD localization model (see image).

Road Model, HD Lane Model, and HD Localization Model.

Now let’s discuss the three models.

1. The Road Model Layer of the HD Live Map provides data farther away than the sensors can see. It also contains data that the sensors cannot see because the information is either not signposted or not visible. This layer helps vehicles anticipate upcoming situations, supporting driver safety and improving the overall driving experience. This layer contains the road topology, road axis geometry, and road level attributes.

2. The HD Lane Model layer contains lane topology data and lane level attributes, as well as lane geometry for center and edge lines. For positioning purposes, it is advantageous that the geometry is encoded as a piecewise linear polyline defined by shape points rather than as a functional representation such as a clothoid or a spline.

3. The HD Localization Model includes various localization markers such as poles or signposts to assist in positioning. Localization algorithms enable HD positioning by matching localization landmarks on the map, represented by 3D bounding boxes, with landmark information observed by sensors.
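One reason shape-point geometry is convenient for positioning is that relating a position estimate to a lane line reduces to simple segment arithmetic. The following sketch illustrates this in a flat, local 2D frame. The function name, the coordinate frame, and the sign convention for the lateral offset are assumptions made for this example and are not taken from the HERE HD Live Map or NDS.

```python
import math


def project_onto_polyline(point, shape_points):
    """Project a 2D point onto a polyline given as a list of (x, y) shape points.

    Returns (arc_length, lateral_offset): the distance travelled along the
    polyline to the closest point, and the signed distance from the polyline
    (positive when the point lies to the left in the direction of travel).
    """
    px, py = point
    best = None  # (distance, arc_length, signed_offset) of the closest segment
    travelled = 0.0

    for (ax, ay), (bx, by) in zip(shape_points, shape_points[1:]):
        abx, aby = bx - ax, by - ay
        seg_len_sq = abx * abx + aby * aby
        if seg_len_sq == 0.0:
            continue  # skip duplicated shape points
        seg_len = math.sqrt(seg_len_sq)

        # Parameter of the closest point on the segment, clamped to [0, 1].
        apx, apy = px - ax, py - ay
        t = max(0.0, min(1.0, (apx * abx + apy * aby) / seg_len_sq))
        qx, qy = ax + t * abx, ay + t * aby
        dist = math.hypot(px - qx, py - qy)

        # The sign of the 2D cross product tells left from right of the segment.
        cross = abx * apy - aby * apx
        signed = dist if cross >= 0.0 else -dist

        if best is None or dist < best[0]:
            best = (dist, travelled + t * seg_len, signed)
        travelled += seg_len

    if best is None:
        raise ValueError("polyline needs at least two distinct shape points")
    return best[1], best[2]
```

For a hypothetical centerline `[(0, 0), (10, 0), (10, 10)]`, the point `(4, 1)` projects to an arc length of 4 with a lateral offset of 1 to the left. A clothoid or spline representation would typically need an iterative closest-point search for the same query.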

All this information is encoded and published by HERE in the Navigation Data Standard (NDS) format and can be used by ADAS, navigation, and AD solutions.

Creating such an HD dataset requires a data collection and maintenance approach that ensures the HD map provides validated, highly accurate data. This level of accuracy cannot yet be achieved with the perception stack of series production vehicles. Therefore, HERE is deploying its HERE True capturing system with a suite of high-precision sensing systems. Map features that cannot be captured by sensors are obtained by HERE from a variety of sources, including road authorities, imagery providers, and other third-party vendors. Selected HD map features are maintained using a large amount of anonymized vehicle and infrastructure sensor data that HERE has access to.

The complexity of creating and maintaining an HD map should not be underestimated. It provides a fundamental data layer that is used as a reference by other systems, and such a reference must be highly accurate and trustworthy. Only then can the future of autonomous driving be properly planned and successfully advanced.

Stay tuned for our second post on this topic, in which we will look at how HD positioning is achieved using visual inertial odometry algorithms to generate a precise 6-DoF pose.
