Innovation in ACES technology (autonomous driving, connectivity, electrification, and shared/intelligent mobility) is accelerating. Around $70 billion was invested in this area in the first half of 2021 alone. E-mobility is gaining momentum, especially in Europe, as the E-Mobility Index 2021 shows. In the wake of these developments, new approaches to the navigation experience are becoming increasingly important. How will we be guided to our destination in the future? How will we travel more efficiently, comfortably, and safely? In this third part of our three-part blog series, we look at how the HD map data from part one and the VIO vehicle positioning from part two are combined in NNG’s iGO Navigation SDK to support the innovative navigation experiences we can expect in 2022 and beyond.

Navigation is undoubtedly a key feature of connected vehicles, especially when they are electric. The core functions of a navigation system are destination selection, route planning, guidance, and map display. All of these features rely on map data. The NNG iGO Navigation SDK provides the flexibility and scalability needed to implement and roll out navigation experiences efficiently across multiple brands and feature sets. It provides the features decoupled from the HMI (human-machine interface) design and enables project-specific UI/UX development, customization, and brand-specific design. These navigation feature modules work with SD and HD map content encoded in NDS format. NNG, which has been a member of the NDS Association since 2015, built a system whose client data is kept up to date by OTA updates from NNG’s map content cloud.

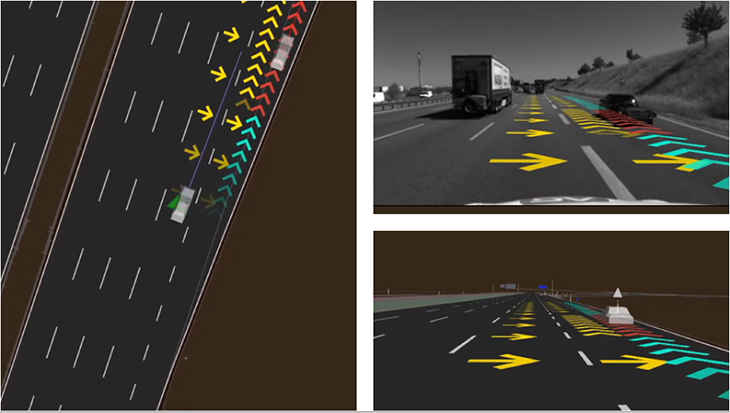

NNG’s iGO Navigation SDK allows parts of the navigation functionality, such as routing or search, to run locally on the client or online in the cloud. In addition, parts of the Navigation SDK run on the IVI system, providing data and features to an ADAS and Automated Driving (AD) ECU. To offer drivers an amazing navigation guidance experience, NNG has developed a feature that projects guidance on top of the road ahead using a head-up display. To overlay visual guidance illustrations exactly on the road and scene ahead of the driver, the system needs a precise 6-degrees-of-freedom (6-DoF) pose: the position of the overlay together with its roll, pitch, and yaw. Only with such a pose can navigation guidance content be projected accurately into an augmented reality (AR) scene.
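To illustrate why all six degrees of freedom matter for the overlay, here is a minimal sketch of how a point on the road could be projected into an image plane. It is not NNG's implementation: the function names, the axis convention (x forward, y left, z up), the roll/pitch/yaw rotation order, and the simple pinhole model with intrinsics `fx`, `fy`, `cx`, `cy` are all assumptions made for this example.

```python
import math

def rotation_matrix(roll, pitch, yaw):
    """Body-to-world rotation R = Rz(yaw) * Ry(pitch) * Rx(roll), angles in radians."""
    cr, sr = math.cos(roll), math.sin(roll)
    cp, sp = math.cos(pitch), math.sin(pitch)
    cy, sy = math.cos(yaw), math.sin(yaw)
    return [
        [cy * cp, cy * sp * sr - sy * cr, cy * sp * cr + sy * sr],
        [sy * cp, sy * sp * sr + cy * cr, sy * sp * cr - cy * sr],
        [-sp, cp * sr, cp * cr],
    ]

def project_point(point_world, pose, fx, fy, cx, cy):
    """Project a world point into pixel coordinates for a given 6-DoF pose.

    pose is (x, y, z, roll, pitch, yaw) of the viewing device in the world
    frame (hypothetical convention: x forward, y left, z up at zero rotation).
    Returns (u, v) in pixels, or None if the point is not in front of the viewer.
    """
    x, y, z, roll, pitch, yaw = pose
    r = rotation_matrix(roll, pitch, yaw)
    d = [point_world[0] - x, point_world[1] - y, point_world[2] - z]
    # World -> body frame: multiply by the transpose of the body-to-world rotation.
    forward, left, up = (
        sum(r[row][col] * d[row] for row in range(3)) for col in range(3)
    )
    if forward <= 0.0:
        return None  # behind the viewer, nothing to draw
    # Pinhole model: image u grows to the right, v grows downwards.
    u = cx + fx * (-left) / forward
    v = cy + fy * (-up) / forward
    return (u, v)

if __name__ == "__main__":
    # A point on the road surface 10 m ahead, viewed from 1.2 m above the ground.
    pose = (0.0, 0.0, 1.2, 0.0, 0.0, 0.0)
    print(project_point((10.0, 0.0, 0.0), pose, 1000.0, 1000.0, 640.0, 360.0))
```

In this model, a small error in pitch or yaw shifts every projected point, and the shift in meters on the road grows with distance. That is one way to see why the overlay needs an accurate orientation and not just an accurate position.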

We have already described in the previous blog post of this series how sensor information, such as from the front-facing camera, can be used and matched with landmark information stored in a digital map. Since the front-facing camera is very often connected to an automated driving ECU, the high-precision 6-DoF positioning is performed there rather than on the IVI ECU.

The overall architecture of such a 6-DoF overlay positioning system includes the use of HD map data consisting of lane and landmark information, such as that provided by HERE in NDS format, which is then transmitted from the IVI system to an AD ECU. An NDS map access library, for example from NNG, supports the positioning that matches camera sensor data with map data. This allows for the determination of a highly precise 6-DoF position that can be used by all components of a vehicle, including an AR system that overlays the road ahead with navigation guidance imagery. The AD ECU itself can also use this information for a lane-keep-assist (LKA) feature by combining the position information with HD lane information stored in the map data.

To make this all work beautifully as part of an advanced navigation guidance solution, other inputs such as the calculated route path must also be considered. Based on the positioning, map data, and calculated route path, both conventional destination guidance and lane guidance can be performed. As NNG can demonstrate, its system even incorporates detected objects in the vicinity of the vehicle, such as other cars, bicycles, or pedestrians, into the navigation guidance. For this to succeed, lane assignment of all objects, prediction of other road users’ trajectories, and situation analysis are required.
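The lane assignment step can be pictured with a deliberately simplified sketch. It assumes a straight road segment where each lane is described only by the lateral offset of its center line from the ego vehicle and by its width. The `Lane` type and the `assign_lane` function are hypothetical names for this example; a real system would work with the lane geometry stored in the HD map.

```python
from dataclasses import dataclass
from typing import Optional, Sequence

@dataclass(frozen=True)
class Lane:
    lane_id: str
    center_offset_m: float  # lateral offset of the lane center from the ego vehicle, left positive
    width_m: float

def assign_lane(lateral_offset_m: float, lanes: Sequence[Lane]) -> Optional[str]:
    """Return the id of the lane containing the object, or None if it is off the road.

    Simplification: straight road, lanes parallel to the ego heading. If an
    object lies within more than one lane, the one with the closest center wins.
    """
    best_id = None
    best_dist = None
    for lane in lanes:
        dist = abs(lateral_offset_m - lane.center_offset_m)
        inside = dist <= lane.width_m / 2.0
        if inside and (best_dist is None or dist < best_dist):
            best_id, best_dist = lane.lane_id, dist
    return best_id

if __name__ == "__main__":
    lanes = [
        Lane("left", 3.5, 3.5),
        Lane("ego", 0.0, 3.5),
        Lane("right", -3.5, 3.5),
    ]
    # A detected car 3.0 m to the left of the ego vehicle.
    print(assign_lane(3.0, lanes))
```

Once every detected object has a lane, trajectory prediction and situation analysis can reason per lane, for example about whether the lane the route requires is currently occupied.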

Would you like to learn more about HD positioning, HD maps and their contribution to modern navigation concepts? In the first part of our three-part blog series, you can read about the numerous possibilities of HD maps. The second part deals with Visual Inertial Odometry (VIO).

You can also read more about the full topic in the free whitepaper from navigation company NNG, HD expert HERE, and SLAM and HD specialist Artisense, which explains how AR guidance can improve future mobility by making it safer and more convenient.
